Why the IEA's "Energy & AI" Report Misses the Forest for the Trees

The International Energy Agency's April 2025 report masterfully explains how to power an AI boom, but it sidesteps the crucial question of when doing so actually makes sense.

The IEA's April 2025 report "Energy & AI" reads like a technical manual for accommodating inevitable growth. Data center electricity demand will more than double to ~945 TWh by 2030, it tells us. Renewables will meet roughly half the incremental demand. Small modular reactors will come online around 2030. Grid constraints will delay about 20% of planned capacity.

These projections are presented with the clinical precision we've come to expect from the IEA—detailed supply curves, technology deployment scenarios, and infrastructure requirements mapped out with impressive granularity. But something fundamental is missing from this 200-page analysis: any serious grappling with why this trajectory deserves our scarce resources, or whether it aligns with genuine human priorities.

The legitimacy gap

The report's headline figure—that data centers currently consume ~1.5% of global electricity—obscures more than it reveals. This seemingly modest percentage masks acute local impacts and says nothing about opportunity costs. When we dedicate enormous quantities of clean electricity to training the latest large language model, what health clinics, electric vehicle charging networks, or industrial decarbonization projects go without power?

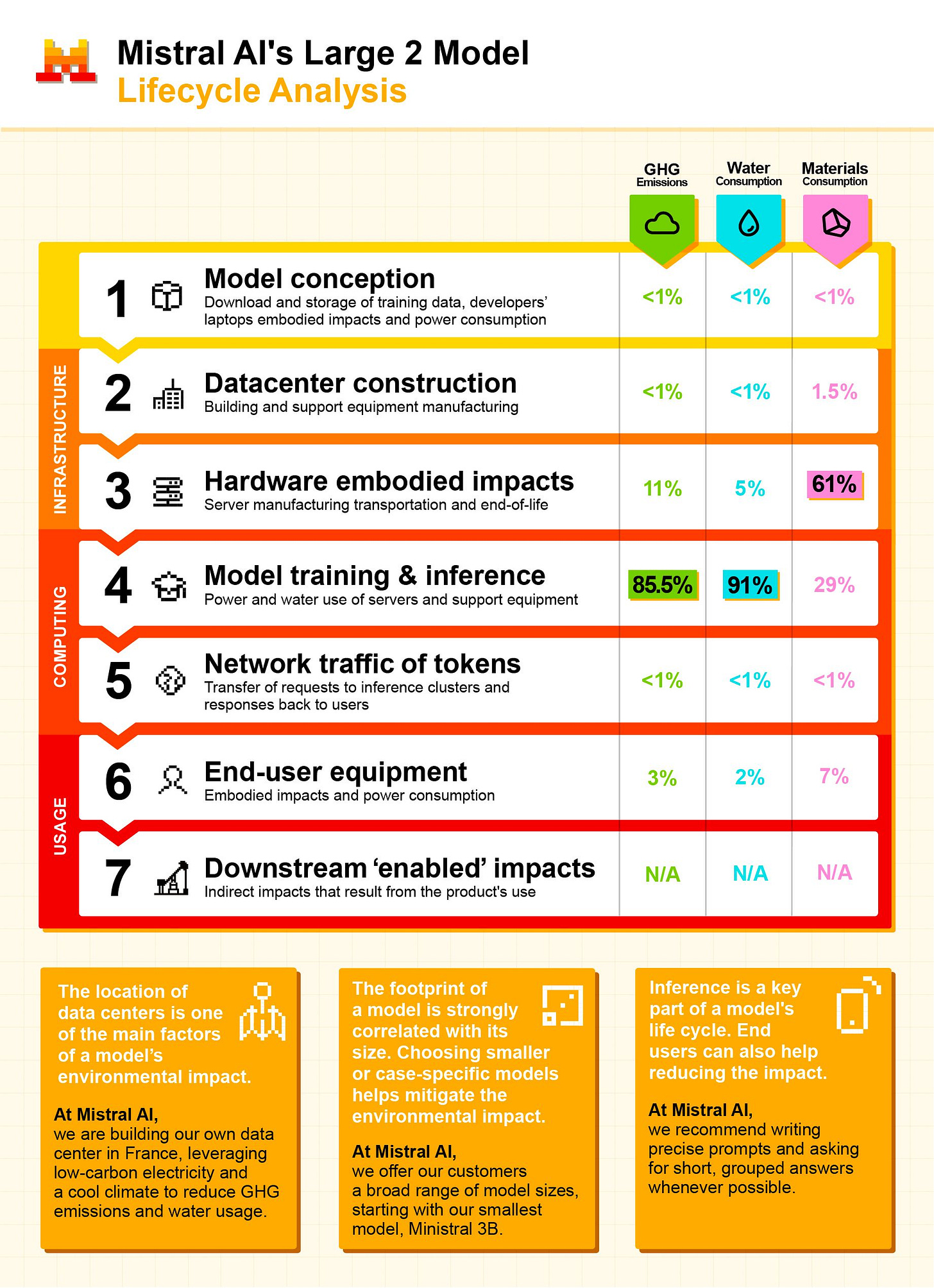

More critically, the IEA's electricity-focused lens misses the broader environmental picture entirely. Mistral AI's groundbreaking lifecycle assessment, published in collaboration with Carbone 4 and France's ecological transition agency (ADEME), reveals the true scale of AI's resource footprint. Training its Large 2 model generated 20,400 tonnes of CO₂ equivalent, consumed 281,000 cubic meters of water, and depleted mineral resources equivalent to 660 kg of antimony (kg Sb eq, the standard unit for resource depletion): impacts that go far beyond the electricity meter.

These "upstream emissions" from manufacturing servers, mining rare earth elements, and cooling systems represent a substantial portion of AI's total environmental burden. Yet the IEA's analysis treats electricity consumption as if it operates in isolation from these material and water impacts.

The IEA treats AI electricity demand as weather—something to be predicted and accommodated rather than evaluated and potentially redirected. This technocratic framing reflects a deeper intellectual failure: confusing what can be built with what should be built.

Consider the report's assumption that current growth trajectories will continue largely unabated. It models scenarios where data center demand reaches ~1,200 TWh by 2035—nearly triple today's levels. But these projections rest on questionable premises: that compute-intensive applications will continue expanding regardless of social utility, that efficiency gains won't meaningfully slow demand growth, and that society will indefinitely prioritize AI scale over other pressing electrification needs.
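The projections quoted here are easier to compare as multiples of today's demand and as implied compound annual growth rates. The short Python sketch below does that arithmetic. It assumes a 2024 baseline of roughly 415 TWh, the IEA's estimate of current data center consumption, which this article does not state; the 2030 and 2035 figures are the ones quoted above, and the function names are illustrative.

```python
"""Implied growth behind the IEA data center projections (illustrative arithmetic)."""

# ASSUMPTION: 2024 baseline of ~415 TWh (the IEA's estimate, about 1.5% of
# global electricity). The 2030 and 2035 values are the projections quoted above.
BASELINE_YEAR, BASELINE_TWH = 2024, 415.0
PROJECTIONS = {2030: 945.0, 2035: 1200.0}


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` over `years`."""
    return (end / start) ** (1.0 / years) - 1.0


def summarize() -> dict[int, tuple[float, float]]:
    """Map each projection year to (multiple of baseline, implied CAGR)."""
    out = {}
    for year, twh in PROJECTIONS.items():
        multiple = twh / BASELINE_TWH
        rate = cagr(BASELINE_TWH, twh, year - BASELINE_YEAR)
        out[year] = (multiple, rate)
    return out


if __name__ == "__main__":
    for year, (multiple, rate) in summarize().items():
        print(f"{year}: {multiple:.2f}x baseline, ~{rate:.1%} per year")
```

Run as written, it prints about 2.28x and roughly 14.7% per year for 2030, and about 2.89x and roughly 10.1% per year for 2035, consistent with "more than double" and "nearly triple".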

More troubling is what the analysis omits entirely:

No framework for distinguishing between AI applications that serve fundamental human needs and those that don't. Fundamental human needs are best mapped in Manfred Max-Neef's work, especially his Human Scale Development framework (Wikipedia's article on fundamental human needs offers a summary, but Max-Neef's own writing is worth reading).

No acknowledgment that we operate within planetary boundaries, which constrain how much energy we can responsibly consume and therefore require us to prioritize renewable energy and eliminate our need for fossil fuels.

The four-gate test for energy allocation to AI

The goal is to ensure that each marginal kilowatt-hour, and each kilogram of scarce materials, spent on AI delivers more health, safety, resilience, and decarbonization than it costs, including the opportunity cost of not using those resources elsewhere. I propose a four-gate test that any major AI facility should pass before plugging into the grid:

Gate 1: additional, hourly-matched clean power

New AI load must be met by genuinely additional low-emissions supply, matched hour by hour. Annual renewable energy credits don't count: we need 24/7 clean, firm electricity that doesn't cannibalize clean power needed elsewhere. This means campus-level clean energy disclosure, power purchase agreements that clearly add capacity to the same grid, and firm supply arrangements consistent with decarbonization trajectories.
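The gap between annual certificates and hourly matching is easiest to see with numbers. The Python sketch below compares the two on a hypothetical six-hour window with a flat load and solar-shaped clean supply; the function names and the profile are illustrative assumptions. Real 24/7 accounting runs over all 8,760 hours of a year and must also establish additionality and deliverability on the same grid, which this sketch does not model.

```python
"""Annual-style vs. hourly matching of facility load against clean supply."""

from typing import Sequence


def annual_style_match(load_mwh: Sequence[float], clean_mwh: Sequence[float]) -> float:
    """Coverage when clean energy is netted over the whole period, as with
    annual certificates: a surplus in one hour offsets a deficit in another."""
    return min(sum(clean_mwh) / sum(load_mwh), 1.0)


def hourly_match(load_mwh: Sequence[float], clean_mwh: Sequence[float]) -> float:
    """Share of load met by clean supply delivered in the same hour."""
    if len(load_mwh) != len(clean_mwh):
        raise ValueError("load and clean supply must cover the same hours")
    # Each hour can contribute at most its own load; surpluses are not carried over.
    matched = sum(min(load, clean) for load, clean in zip(load_mwh, clean_mwh))
    return matched / sum(load_mwh)


if __name__ == "__main__":
    # HYPOTHETICAL six hours: flat 100 MW load (100 MWh each hour), solar-shaped supply.
    load = [100, 100, 100, 100, 100, 100]
    clean = [0, 150, 250, 150, 50, 0]
    print(f"annual-style match: {annual_style_match(load, clean):.0%}")
    print(f"hourly match:       {hourly_match(load, clean):.0%}")
```

Netting over the whole window reports 100% coverage, while hourly matching credits only 350 of 600 MWh (about 58%): the midday surplus cannot power the facility at night.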

Gate 2: non-crowding of local grids

The IEA acknowledges that around 20% of planned data center capacity could face delays from grid constraints, yet it doesn't interrogate what this means for competing priorities. Projects should strengthen local grids where they land, not strain them. This requires independent interconnection studies showing no net degradation of local reliability, plus funded grid-benefit packages (dynamic line rating, flexibility services, storage) sized to the project's peak load.

Gate 3: social-utility commitment

Here's where we separate genuinely valuable AI from digital junk food. Operators should dedicate a meaningful share of compute capacity (at least 30-50%) to workloads with high human-needs value: grid reliability, healthcare, building efficiency, disaster response. The IEA's own analysis finds that AI can reduce power outage durations by 30-50% and improve grid stability. This is the kind of measurable public value to prioritize.

Gate 4: full-lifecycle stewardship

The Mistral AI study makes this gate especially urgent. Electricity-related emissions are just one piece of AI's environmental puzzle. The 660 kg antimony-equivalent of mineral resource depletion attributed to training a single large model, the 281,000 cubic meters of water it consumed, and the massive upstream emissions from hardware manufacturing demand comprehensive stewardship commitments.

Operators should commit to longer server lifetimes, refurbishment programs, high-recovery recycling of critical materials, and waste heat reuse where feasible. Liquid cooling increasingly enables 40-80°C thermal flows suitable for district heating—often cheaper than fossil combined heat and power. Given that material depletion accounts for a significant portion of AI's total impact, circular economy principles aren't optional—they're essential.
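To make the test concrete, the four gates can be encoded as a simple screening function. This is a hypothetical Python sketch, not an existing tool: the field names, the 90% hourly clean-power threshold, and the reading of "sized to the project's peak load" as grid-benefit capacity (in MW) at least equal to peak load are all assumptions. The 30% social-utility floor is the low end of the range proposed in Gate 3.

```python
"""Hypothetical four-gate screen for a proposed AI facility (illustrative only)."""

from dataclasses import dataclass


@dataclass
class FacilityProposal:
    # Gate 1: share of hourly load met by clean supply, and whether that supply is new.
    hourly_clean_share: float
    supply_is_additional: bool
    # Gate 2: result of an independent interconnection study, plus grid-benefit sizing.
    no_net_reliability_loss: bool
    grid_benefit_mw: float
    peak_load_mw: float
    # Gate 3: share of compute capacity dedicated to high human-needs workloads.
    social_compute_share: float
    # Gate 4: full-lifecycle stewardship commitments.
    extended_server_lifetimes: bool
    refurbishment_program: bool
    critical_material_recycling: bool
    heat_reuse_or_infeasible: bool  # waste heat reused, or shown to be infeasible


def evaluate(
    p: FacilityProposal,
    min_hourly_clean: float = 0.90,  # HYPOTHETICAL threshold
    min_social_share: float = 0.30,  # low end of the 30-50% range
) -> dict[str, bool]:
    """Return a pass/fail verdict for each gate."""
    return {
        "gate1_clean_power": p.supply_is_additional
        and p.hourly_clean_share >= min_hourly_clean,
        # ASSUMPTION: "sized to peak load" means benefit capacity >= peak load (MW).
        "gate2_local_grid": p.no_net_reliability_loss
        and p.grid_benefit_mw >= p.peak_load_mw,
        "gate3_social_utility": p.social_compute_share >= min_social_share,
        "gate4_lifecycle": all(
            (
                p.extended_server_lifetimes,
                p.refurbishment_program,
                p.critical_material_recycling,
                p.heat_reuse_or_infeasible,
            )
        ),
    }


def passes_all(p: FacilityProposal, **thresholds: float) -> bool:
    """A facility may connect only if every gate passes."""
    return all(evaluate(p, **thresholds).values())


if __name__ == "__main__":
    # HYPOTHETICAL proposal: strong on power and grid, weak on social utility.
    proposal = FacilityProposal(
        hourly_clean_share=0.93, supply_is_additional=True,
        no_net_reliability_loss=True, grid_benefit_mw=120.0, peak_load_mw=100.0,
        social_compute_share=0.20,
        extended_server_lifetimes=True, refurbishment_program=True,
        critical_material_recycling=True, heat_reuse_or_infeasible=True,
    )
    for gate, ok in evaluate(proposal).items():
        print(f"{gate}: {'pass' if ok else 'fail'}")
    print("connect to grid:", passes_all(proposal))
```

The hypothetical proposal in the example passes gates 1, 2, and 4 but fails gate 3, so it would not connect. Keeping the thresholds as parameters lets a regulator tighten them (for example, raising the social-utility floor toward 50%) without changing the logic.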

Why this matters now

The AI industry's favorite defense, "it's only 1-3% of global electricity," crumbles under scrutiny. Mistral AI's lifecycle data shows why: an electricity share dramatically understates AI's true environmental burden once you include water consumption, material depletion, and upstream emissions. Local impacts matter enormously, and that percentage is growing fast. More fundamentally, this framing treats electricity as if it were abundant rather than precious.

The lifecycle assessment also reveals a stark correlation between model size and environmental impact—impacts scale roughly proportionally with model parameters. This underscores the importance of matching model scale to actual use cases rather than building ever-larger systems as a matter of course.

We're living through the most important energy transition in human history, racing to decarbonize before climate breakdown becomes irreversible. Every kilowatt-hour matters. Every kilogram of rare earth elements matters. Every cubic meter of freshwater matters. Every siting decision shapes whether communities get reliable clean power or watch AI facilities consume the renewable energy meant for their schools and hospitals.

The IEA's report provides essential technical groundwork for this transition. But technical feasibility isn't ethical legitimacy. Just because we can build massive AI infrastructure doesn't mean we should—not without first asking whether it serves genuine human flourishing better than the alternatives.

The four-gate test offers a practical framework for making these choices responsibly. It's not anti-innovation—it's pro-prioritization. It rewards efficient models, flexible operations, and high-utility applications while screening out digital waste.

As AI reshapes our energy landscape, we need policies that put human needs and planetary limits ahead of undifferentiated scale. The stakes are too high for anything less.