Embracing AI in Education

Fostering the thoughtful adoption of AI in education is imperative for ensuring fair access to AI and its appropriate use. Attempting to ban AI tools would be profoundly misguided and ethically questionable.

As AI transforms education, educators face an ethical dilemma: How can we ensure AI enhances learning without undermining academic integrity or inadvertently punishing students? Can we responsibly incorporate AI while avoiding unreliable detection methods that might unjustly penalize students?

Educators stand at a critical juncture in the age of AI, tasked with preparing students for a world where artificial intelligence will be integral to personal, academic, and professional growth.

While AI offers immense potential for personalizing learning and fostering deeper understanding, its role in education also raises concerns around academic integrity and fairness. As detection technologies struggle to reliably identify AI-generated content, the focus may need to shift from restriction to responsible integration and ethical use.

AI and learning goals and activities

“Learning is the process of forming an internal model of the outside world”

– Stanislas Dehaene

Learning activities made easier by AI

In recent years, AI-enabled tools have significantly enhanced students' ability to conduct research, manage data, and produce insightful, well-organized work. By streamlining and augmenting complex academic tasks, these tools have the potential to enable students to focus on deeper learning, critical thinking, and synthesis of information across diverse sources and formats.

AI-driven platforms are transforming how learners approach foundational and advanced tasks. Consider the following tasks that AI-enabled tools make much easier:

identifying sources of data for research and other activities through standard or AI-augmented search engines (keeping in mind that “standard” search engines already use advanced algorithms and machine learning), through academic search tools like Semantic Scholar or Google Scholar and, of course, through more traditional sources, all of which will in time receive their own AI enhancements: databases (e.g., PLOS One, PubMed, IEEE Xplore…), academic repositories, and open data portals.

managing references and extracting content from various sources, with tools like SciSpace, Jenni AI, NotebookLM or Afforai, which compete directly with reference managers widely used by researchers such as Zotero or Mendeley. This class of activities also has an important collaborative dimension for groups working on an academic project and for research teams: annotating, tagging, and sharing references with team members.

summarizing the content of one or more sources across file types (PDF, HTML, DOC, XLS…) with platforms like Perplexity.ai or Chrome and Firefox extensions like Wiseone, which offer varying degrees of integration with different foundation language models as well as cross-checking, cross-referencing and source citation features.

analyzing quantitative data in basic and more advanced ways, including exploratory data analysis and predictive applications, with Google Sheets add-ons like Coefficient, with platforms like AnswerRocket, which is positioned as an enterprise-grade AI assistant for analytics work, and of course with the AI extensions of more established analytics and data visualization tools like Qlik Sense or Tableau AI, the data-crunching capabilities added to OpenAI’s platform, and Google’s data analytics tools, such as Looker or the AI-ready data processing solution BigQuery, which are being augmented with proprietary AI models.

simulating possible outcomes of given situations to generate and analyze scenarios, e.g., with solutions like Prevedere, or AI-powered dynamic modeling tools such as Ansys SimAI or Qlik AutoML.

writing code for tasks ranging from querying databases and extracting useful data from large data streams to analyzing and modeling data, creating mock user interfaces, developing small applications or integrating tools, with solutions like Claude AI or GitHub Copilot.

achieving complex synthesis of a variety of sources and insights through successive questions and angles of exploration, with tools like Perplexity, SciSpace or NotebookLM. Furthermore, iterative synthesis and triangulation across sources are important aspects that help students build a balanced understanding of diverse perspectives, supported by tools that facilitate the critical evaluation of sources like Scite AI, EvidenceHunt or Consensus.

augmenting writing and content production using targeted content and output from all of the previous activities, with the standard conversational interfaces of foundation language models or with tools dedicated to writing like Writefull, Grammarly or Paperguide. Here we could also mention “second brain” tools or platforms like Notion AI or Mem, and of course translation tools such as DeepL, which also belong in this class of activities for work that needs to be presented in different languages, often for both domestic and international audiences.

Impact of AI on learning goals and activities

This section examines the ways AI tools influence key learning goals and activities. Stanislas Dehaene, a cognitive neuropsychologist, professor at the Collège de France and world-class expert on human cognition and learning, defines learning as “the process of forming an internal model of the outside world”. In his book “How We Learn”, he argues that learning works much like Bayesian inference: prior hypotheses are revised through education, experience, and accumulated inferences. This involves fine-tuning the parameters and rules that characterize our internal models. That is not unlike the way AI models are shaped, although our brains are far more efficient in terms of energy consumed per unit of learning.
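The Bayesian analogy can be made concrete with the textbook form of Bayes' rule. The notation (H for a hypothesis about the world, D for newly observed evidence) and the numbers in the worked example are ours, chosen purely for illustration; they are not taken from Dehaene's book.

```latex
P(H \mid D) = \frac{P(D \mid H)\,P(H)}{P(D)},
\qquad
P(D) = P(D \mid H)\,P(H) + P(D \mid \neg H)\,P(\neg H)

% Hypothetical example: P(H) = 0.5, P(D | H) = 0.8, P(D | not H) = 0.2
P(H \mid D) = \frac{0.8 \times 0.5}{0.8 \times 0.5 + 0.2 \times 0.5} = \frac{0.4}{0.5} = 0.8
```

Evidence that is four times more likely under H than under its alternative moves the belief in H from 0.5 to 0.8: in Dehaene's terms, a prior hypothesis has been revised by experience.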

Dehaene identifies the following four areas as “pillars of learning”:

Attention: This is the first condition for successful learning. Students must pay attention to what needs to be learned. Teachers should draw students' attention by using techniques like quizzing or adjusting their tone of voice.

Active Engagement: Passive listening is insufficient for effective learning. Students should actively engage with the material by asking questions, speculating about hypotheses, or performing experiments. This intellectual struggle helps implant new knowledge in the brain and memory.

Error Feedback: Making mistakes can be beneficial if understood and corrected. Feedback allows learners to move past errors and make adjustments. This process of prediction, error, and correction leads to successive adjustments that favor learning.

Consolidation: New information and skills must be consolidated for durable and automatic use. This involves repeated practice until mastery is achieved. Sleep plays an essential role in this consolidation process.

These pillars are all essential to achieving the key learning goals listed in the tables below, which summarize an analysis of the positive and negative impacts of AI on learning goals and activities, cross-referenced to the stages or categories of Bloom’s taxonomy.

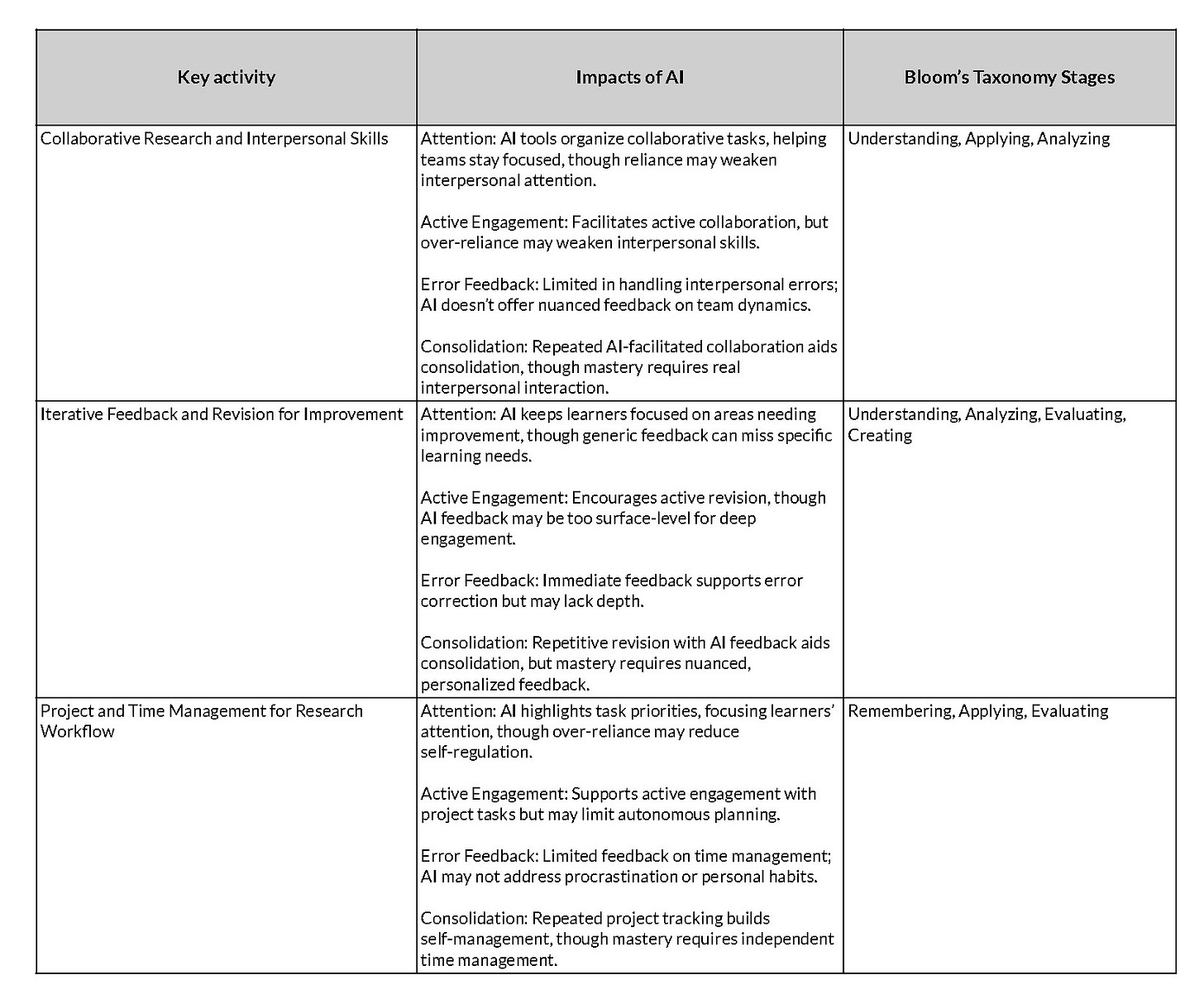

A similar analysis of key activities in the learning process is summarized in the tables below:

Human learning and AI

Human learning best practices

In his podcast episodes, Dr. Andrew Huberman explores science-backed strategies to enhance studying and learning. He delves into neuroplasticity and effective learning protocols, such as the benefits of self-testing soon after exposure to new material, which boosts retention and understanding. Huberman discusses study habits that limit distractions, the importance of focus, sleep's role in consolidating memory, and tools like mindfulness and periodic testing to reinforce knowledge.

Obviously, the topic of human learning is far too wide and deep to address here, but there are established best practices in learning, so we can consider how AI may support or hinder them. We know that regular, disciplined, habit-forming practices have a profound and positive impact on human learning; these involve active engagement with content, self-testing soon after exposure to new content, and writing about a given topic. Huberman describes an experiment that helped demonstrate the importance of testing soon after being exposed to new content. Here are his concluding remarks:

Based on what we now know about how we humans learn and what the recommended practices are in engaging with content as well as in ensuring good sleep and daily habits that reinforce learning, we can identify how AI can help and improve our learning and what the risks are.

How AI affects human learning

AI enhancing human learning

Enhanced Access to Information and Resources

AI-powered tools provide students with quick, organized access to vast amounts of information, helping them locate relevant resources and delve deeper into their studies. With AI’s ability to summarize, translate, and simplify complex information, students can engage with challenging material more easily, regardless of their background knowledge. This access is especially valuable for students in remote or underserved areas, as AI opens up opportunities for global learning.

By democratizing access to high-quality educational content, AI can help bridge educational gaps and foster inclusivity.

There is a growing body of research evidence showing that AI-enabled tutoring can improve students' learning. In fact, Intelligent Tutoring Systems have been the subject of academic research for over 30 years but have faced practical constraints in large-scale implementation, which the next generation of AI for education may now resolve.

The field investigates learning wherever it occurs, in traditional classrooms or in workplaces, in order to support formal education as well as lifelong learning. It brings together AI, which is itself interdisciplinary, and the learning sciences (education, psychology, neuroscience, linguistics, sociology, and anthropology) to promote the development of adaptive learning environments and other AIEd tools that are flexible, inclusive, personalised, engaging, and effective. At the heart of AIEd is the scientific goal to “make computationally precise and explicit forms of educational, psychological and social knowledge which are often left implicit.”

Luckin, R. and Holmes, W., 2016. Intelligence unleashed: An argument for AI in education.

In addition to systems designed to automate the tutoring process and replace human tutors, there is another approach that uses AI to identify appropriate tutors for students based on the kind of support they need in their learning. These systems are called “learning network orchestrators”. Open Tutor (OT) developed by Beijing Normal University is a good example of such a system. When a student needs clarification on classroom content, they can use the OT app to connect with human tutors, who are rated by other students, for a 20-minute, one-on-one session. Although relatively costly to scale due to its human tutor involvement, OT uniquely empowers the learner to decide what they want to learn, with AI playing a supportive rather than directive role.

Personalized Learning and Adaptive Feedback

AI can enable highly personalized learning experiences by analyzing student data and tailoring learning paths to meet individual needs. Adaptive learning systems can adjust the difficulty and pace of content based on a student’s performance, ensuring that each learner is appropriately challenged. This personalized approach enhances engagement and motivation, as students receive content suited to their specific learning level and style.
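Real adaptive learning systems rely on far richer models (item response theory or knowledge tracing, for instance). As a deliberately minimal, hypothetical illustration of adjusting difficulty based on a student's performance, the sketch below uses a simple staircase rule. The class name, the five difficulty levels and the "two correct answers to advance" threshold are all assumptions for illustration, not taken from any particular product.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveDifficulty:
    """Hypothetical 'two-up, one-down' staircase: raise the level after two
    consecutive correct answers, lower it after any incorrect answer."""
    level: int = 1
    min_level: int = 1
    max_level: int = 5
    streak: int = 0  # consecutive correct answers at the current level

    def record(self, correct: bool) -> int:
        """Update the difficulty level from one answer and return the new level."""
        if correct:
            self.streak += 1
            if self.streak >= 2:
                # Two in a row: step up, but never beyond the hardest level.
                self.level = min(self.level + 1, self.max_level)
                self.streak = 0
        else:
            # Any mistake: step down, but never below the easiest level.
            self.level = max(self.level - 1, self.min_level)
            self.streak = 0
        return self.level


tracker = AdaptiveDifficulty()
history = [tracker.record(c) for c in [True, True, True, True, False, True]]
print(history)  # [1, 2, 2, 3, 2, 2]
```

The rule keeps each learner near the edge of what they can currently do: success pushes the content slightly harder, while an error immediately eases it, which is one simple way of keeping a student "appropriately challenged".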

Furthermore, AI provides immediate, targeted feedback, allowing students to quickly identify strengths and weaknesses and focus on areas requiring improvement. This level of personalization and responsiveness fosters deeper learning and helps students make consistent progress.

Reshaping Teaching Methodologies and Reducing Administrative Burden

AI is transforming traditional teaching methodologies, providing educators with powerful tools to enhance their instructional methods and streamline routine tasks. AI has great potential to help educators design their courses and adapt them to changing circumstances or to the characteristics of each cohort of students. Through (semi-)automated grading systems or automatic assessment of deterministic deliverables such as computer code or STEM exercises, interactive lesson creation, and real-time tracking of student progress, AI could reduce teachers' administrative workload, allowing them to dedicate more time to direct interaction with students.
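Deterministic deliverables lend themselves to automatic assessment because correctness can be checked mechanically. Here is a minimal sketch of test-based grading for a programming exercise; the exercise ("return the mean of a list of numbers"), the test cases and the `grade` function are hypothetical.

```python
from typing import Callable

# Hypothetical instructor-defined test cases: (input, expected output).
TEST_CASES = [([1, 2, 3], 2.0), ([10], 10.0), ([-1, 1], 0.0)]


def grade(submission: Callable[[list], float], cases=TEST_CASES, tol=1e-9) -> float:
    """Return the fraction of test cases the submitted function passes."""
    passed = 0
    for args, expected in cases:
        try:
            if abs(submission(args) - expected) <= tol:
                passed += 1
        except Exception:
            pass  # a crash or wrong return type simply counts as a failure
    return passed / len(cases)


def student_mean(xs):
    return sum(xs) / len(xs)


def buggy_mean(xs):
    return sum(xs) / (len(xs) + 1)  # off-by-one error in the denominator


print(grade(student_mean))  # 1.0
print(grade(buggy_mean))    # 0.3333333333333333 (only the [-1, 1] case passes)
```

A real autograder would also run submissions in a sandbox with time limits, and the most useful output for learning is not the score but the list of failing cases fed back to the student.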

By freeing educators from time-consuming tasks that do not strengthen the learning process for students, AI can enable them to focus on providing tailored support, encouraging critical thinking, and fostering deeper understanding. Additionally, AI-generated insights into student progress help teachers tailor their approaches and identify students who may need additional guidance.

Revolutionizing Assessment for Targeted Support

AI stands to revolutionize assessment practices by introducing tools that make evaluations more efficient, accurate, and personalized. AI-powered assessment tools analyze student responses in real-time, providing instant feedback on comprehension and learning progress. This data-driven approach allows educators to identify students who may be struggling and offer timely, targeted support, ultimately enhancing learning outcomes.

By moving beyond traditional exams and enabling ongoing, formative assessment, AI helps educators create a more responsive and supportive learning environment.

Increased Efficiency and Productivity in Learning

AI assists both students and educators by automating routine tasks, such as note organization, reference management, and study planning. This automation improves productivity, enabling students to handle heavy workloads more effectively while allowing educators to allocate their time to higher-level teaching tasks.

With AI taking over repetitive tasks, students can focus more on understanding and exploring content in-depth, enhancing the overall learning experience. Efficiency gains through AI make studying less overwhelming and help students maintain consistency and focus in their academic efforts.

Support for Complex Data Analysis and Research

AI empowers students and researchers to engage in sophisticated data analysis, enabling them to uncover patterns and insights in large, complex data sets. AI-powered tools for data visualization, predictive modeling, and statistical analysis make it possible for students to conduct high-level research with minimal technical expertise. This is especially beneficial in data-intensive fields such as science, engineering, and social sciences, where AI helps learners explore data-rich environments that enhance their understanding of complex phenomena.

By making advanced analysis tools accessible, AI helps students develop crucial research skills that would otherwise be challenging or more time-consuming to attain.

Enriched Learning Through Simulation and Real-World Applications

AI-driven simulations and virtual environments offer students hands-on learning experiences that bring theoretical knowledge to life. Students can explore complex scenarios, conduct virtual experiments, and engage with real-world applications in a controlled setting. For example, medical students can use AI simulations to practice procedures safely, while business students can model market dynamics to understand economic principles. These experiential learning opportunities allow students to develop problem-solving skills and build practical competencies, better preparing them for real-world challenges in their respective fields.

Support for Accessibility and Inclusive Learning

AI has a significant role in making learning more inclusive by catering to students with disabilities and those facing other learning barriers. With tools like speech-to-text and text-to-speech software, language translation, and visual aids, AI supports accessibility and accommodates diverse learning needs. These technologies enable students with physical, language, or cognitive challenges to participate fully in educational activities, promoting equal opportunities. Additionally, AI can tailor content to various learning styles, ensuring that education is more inclusive and responsive to individual needs.

How AI can hinder human learning

Over-Reliance on Technology and Diminished Human Interaction

The increasing integration of AI in education risks fostering an over-reliance on technology, potentially reducing the essential human interactions that are crucial for well-rounded student development. While AI offers personalized learning tools and efficient feedback mechanisms, these may lack the depth and nuance of human guidance. For example, AI-powered grading systems can efficiently assess assignments but may fall short in offering the individualized feedback that helps students understand their strengths, weaknesses, and areas for improvement.

The human element in education extends beyond mere information delivery, encompassing mentorship, emotional support, and the development of social and communication skills. A balanced approach that combines AI-driven efficiency with human interaction is vital for maintaining a holistic learning experience.

Reduced Engagement and Critical Thinking

AI tools designed to enhance learning can, paradoxically, reduce active engagement and critical thinking. The convenience of AI in accessing information and completing tasks may encourage passive consumption, with students relying on AI-provided answers rather than developing essential skills of analysis, evaluation, and independent thought. For instance, AI-powered writing tools that suggest structure and grammar improvements may impede students' growth in organizing and refining their ideas if they become overly dependent on these tools.

Additionally, while AI simulations offer insights into potential outcomes, they may oversimplify complex problems, limiting students’ opportunities to grapple with real-world complexities and develop problem-solving skills. Active intellectual engagement is critical for deep learning, and excessive reliance on AI risks turning learning into a passive experience.

Ethical Concerns and Algorithmic Bias

The integration of AI in education raises significant ethical considerations, particularly concerning data privacy and potential algorithmic bias. AI systems frequently collect extensive data on student performance and behavior, raising concerns about data protection and the misuse of sensitive information.

Furthermore, AI algorithms, trained on historical data, may inadvertently reinforce and amplify existing social and educational inequalities. Algorithmic biases can result in unfair treatment of marginalized students or skewed educational opportunities, perpetuating disparities. Addressing these challenges requires establishing transparent guidelines for data usage, prioritizing privacy, and designing AI systems that actively promote fairness and equity in educational outcomes. Of course, that is easier said than done as it is notoriously difficult to design AI systems with well-formed boundaries and constraints aimed at promoting fairness, equity and integrity.

Accessibility and Equity Challenges

AI-powered educational tools, while promising, may exacerbate existing inequities in access to quality education, particularly for disadvantaged or marginalized students. The reliance on AI in learning environments assumes access to reliable internet, digital devices, and adequate digital literacy skills, all of which may be lacking for certain populations.

Without addressing the digital divide, students from lower socioeconomic backgrounds could be left behind in an increasingly AI-driven educational landscape. Ensuring equitable access to AI technologies and designing implementation strategies that account for socioeconomic differences are essential for creating an inclusive and accessible educational system.

Potential Job Displacement and Changing Educator Roles

The adoption of AI in education raises concerns about potential job displacement for educators and support staff. While AI is not intended to replace teachers, its ability to automate tasks like grading and administrative functions could shift traditional roles within educational institutions.

Educators may need to adapt by focusing on skills that complement AI's capabilities, such as mentorship, creativity, and emotional intelligence, which AI cannot readily replicate. This shift in roles underscores the need to support educators through training and resources that enable them to integrate AI effectively and develop skills that foster human-centered, adaptive learning environments.

Limited Understanding of Complex Subjects

AI systems, while powerful in processing large amounts of data, have limitations in understanding complex and nuanced scientific or philosophical subjects. AI typically operates based on existing data and may struggle with novel or emerging topics where there is limited information. As a result, while AI can assist in research and provide foundational knowledge, it may not achieve the depth of comprehension, critical thinking, and adaptability that human experts bring to scientific inquiry and education.

This limitation underscores the importance of human involvement in fields that require deep, interpretative understanding and emphasizes the need for students to develop these skills independently.

Risks of Misuse and Academic Dishonesty

The ease of access to AI tools also raises concerns about academic integrity and potential misuse. AI-powered writing and content-generation tools, while intended to aid students in refining their work, can be exploited to produce entire assignments with minimal student input, undermining genuine learning and understanding. This risk of misuse calls for clear guidelines on AI usage in educational settings and emphasizes the importance of fostering an environment that prioritizes academic integrity. Educators can mitigate these risks by developing assessment methods that go beyond AI-generated content, encouraging original thought, critical thinking, and student accountability.

Why detecting and banning AI is not the right path

Relying on detection technologies to police AI usage raises both ethical and pedagogical concerns. Ethically, detection tools lack the nuance to fairly assess AI use, often misclassifying students’ work and disproportionately impacting non-native speakers or those with fewer resources. Yet beyond these ethical issues, a reliance on detection shifts educators' roles from fostering learning to policing behavior, inadvertently creating a climate of surveillance rather than curiosity.

When educators focus on detection, they risk prioritizing rule enforcement over the very goals of education: critical thinking, exploration, and genuine engagement with content. This shift not only stifles students’ curiosity but may also discourage them from asking questions, experimenting with new ideas, and developing their own perspectives—all key elements of growth. In fostering a supportive environment where responsible AI use is guided and encouraged, educators can better nurture students’ critical thinking and ensure AI becomes a tool for learning, not a barrier to it.

Detection is unreliable

The research conducted by Sadasivan et al. (2023) shows that generative AI detectors, which are designed to identify AI-generated language patterns, are not foolproof and can be unreliable in many real-world scenarios.

One reason for this is the vulnerability of these detectors to paraphrasing attacks, where even minor rephrasing of generated text can significantly impact the performance and accuracy of the detection system.

Research from Perkins et al. (2023) indicates that AI detection tools are vulnerable to adversarial techniques designed to disguise AI-generated content, raising concerns about their effectiveness in maintaining assessment security. A few examples of adversarial techniques:

Intentional Errors: Incorporating errors like typos, grammatical inconsistencies, and stylistic irregularities can mimic human writing and mislead detectors.

Complexity Adjustment: Tailoring the complexity of text to match typical human writing styles can help AI-generated content blend in.

Prompt Engineering: Carefully crafting prompts to guide AI tools in generating text that strategically incorporates elements to challenge detection capabilities.

Recursive Paraphrasing: Using automated paraphrasing tools to repeatedly rephrase the generated text, making it increasingly difficult for detectors to identify.

Spoofing: Deliberately writing in a way that triggers false detection as AI-generated content.

Mitchell et al. (2023) found that the detection rate for AI-generated text dropped drastically from 70.3% to 4.6% after using an Automated Paraphrasing Tool (APT).

Similarly, Weber-Wulff et al. (2023) observed major drops in accuracy rates after applying APTs and translation tools.

Perkins et al. (2024) argue that if students with access to advanced AI tools and knowledge of adversarial techniques use these methods to generate their academic work, it would undermine the assessment process and create an unfair advantage.

Last, the potential for AI detectors to misclassify text written by non-native English speakers as AI-generated, due to their reliance on standardized linguistic metrics, highlights the risk of further exacerbating existing inequalities in academia.

Detection tools are not ethically acceptable

As AI tools become widely available and, inevitably, integral to learning, the ethical implications of AI detection technologies must be considered, particularly their potential to unfairly target vulnerable groups. Detection tools often lack the nuance needed to accurately distinguish between genuine and AI-generated content, especially when applied to the diverse linguistic and cultural backgrounds found in educational environments.

Educational Focus on Punishment Over Growth: Detection technologies inherently focus on identifying misconduct, which can shift educational priorities from fostering understanding to policing behaviors. By prioritizing detection, educators risk creating a punitive environment that stifles the very curiosity and engagement that AI tools, used ethically, could enhance. This conflict is especially stark for institutions claiming to foster critical thinking and exploration but relying heavily on surveillance.

Undermining Academic Trust: Over-reliance on detection technologies can damage the trust between educators and students. When students feel that their work is subject to automated, potentially flawed scrutiny, it can foster a culture of surveillance rather than genuine learning. This atmosphere can discourage creative, authentic engagement with assignments, as students worry more about detection than demonstrating original thought and critical analysis.

Bias Against Non-Native Speakers: AI detectors often rely on specific linguistic patterns associated with native English speakers, placing non-native students at risk of false positives. This bias results from detection algorithms being trained on standardized language structures, which do not always account for diverse writing styles and expressions. As a result, non-native speakers may find their work flagged as AI-generated simply because of unique syntax or phrasing, rather than any actual misuse of AI.

Asymmetrical Accuracy Across Content Types: AI detection tools show varying degrees of accuracy based on the medium being analyzed. Visual content, like images and video, is often easier to detect due to unique pixel patterns, compression artefacts, and other digital fingerprints left behind by generative AI tools. Audio detection, too, benefits from certain markers, such as speech synthesis artefacts, which make AI-generated content distinguishable from authentic human speech. However, when it comes to text, AI detection faces unique challenges, as generative AI excels at creating human-like language patterns. This makes reliable detection much more difficult, as AI-generated text can closely resemble authentic writing with few detectable markers. This asymmetry means that students in text-based disciplines (humanities and social sciences) may face disproportionate scrutiny and punitive measures, even when detection tools are less reliable in their fields.

Accessibility Inequities: Students from disadvantaged backgrounds may lack access to advanced AI tools, leading to a gap between those who can afford adaptive strategies or tooling that is effective in hiding improper use of AI and those who cannot. This creates an uneven playing field where students with fewer resources may be unfairly flagged or penalized if they attempt to use accessible AI tools, despite doing so within ethical boundaries.

Educators should resist relying on automated decisions to assess AI usage. Detection tools alone cannot define improper use, let alone prioritize the student’s learning over institutional convenience.

The current limitations of AI text detectors suggest that their use for determining academic misconduct should be approached with great caution. In fact, given what we know thus far, the indiscriminate adoption of such tools would be profoundly misguided, contrary to the scientific method, and totally unethical toward learners.

Weaving together human and machine intelligence

Hybrid intelligence for education

The future of education lies not in pitting human intelligence against artificial intelligence but in fostering a collaborative approach that brings out the strengths of both. When thoughtfully integrated, AI can be a powerful ally in the learning process, enhancing both teaching and student engagement without undermining foundational educational principles.

Consider, for example, a history class project where students are asked to analyze the social and economic impacts of the Industrial Revolution. The educator could guide students to use AI tools for initial research—perhaps suggesting an AI-powered summarization tool to sift through large volumes of primary source documents or scholarly articles, allowing students to quickly access relevant content. However, rather than relying solely on AI-generated summaries, the educator would encourage students to verify these sources and challenge any AI-suggested insights by cross-referencing with additional historical data or discussing as a group the nuances AI might miss, such as regional variations or biases in sources.

To deepen critical thinking, the educator could incorporate structured classroom discussions, where students debate AI’s interpretations and explore differing perspectives. AI might serve as a “starting point” in this discussion, sparking questions that students then pursue using traditional research methods, like examining historical records or performing close readings of texts. This collaboration between human and machine thus enables students to explore more complex questions than would be possible with traditional research alone, all while ensuring they develop critical thinking skills by challenging and adding to AI’s output.

Similarly, in a science class, an educator might use AI tools to simulate real-world experiments, such as predicting outcomes in climate change models. Students can explore variables, test hypotheses, and examine projected outcomes using AI simulations. However, the educator would supplement these activities with hands-on experiments or case studies, encouraging students to compare AI predictions with empirical evidence and real-life case scenarios. By doing so, students learn to treat AI as a resource for exploration and hypothesis-building, yet still rely on human insight, experience, and real-world observation for accurate conclusions.

This kind of balanced approach fosters a learning environment where students benefit from AI’s efficiency and capacity for handling vast amounts of information, yet are consistently encouraged to think critically, verify, and expand upon what AI provides. By blending traditional teaching methods with AI-powered tools, educators prepare students for a world where human and machine collaboration is essential, helping them develop both the technical skills to use AI responsibly and the cognitive skills to think beyond it.

The responsibility of educators

The responsibility of educators is to adjust their methods, redesign their courses, and find ways to make AI useful to the process of learning. That requires sustained work and attention to each aspect of the learning process at every level of education. Anyone who expects to avoid that effort by “simply” proclaiming a ban on AI in the name of academic integrity is sorely mistaken. Every student will have the moral right to challenge the motivations behind such measures, since there is an obvious secondary benefit in keeping things as they are and minimizing the work and investment that continuous improvement of education demands. This is all the more true when educators themselves seem quite interested in what AI can do for grading student work. In that facet of education, too, significant work remains before grading becomes a useful tool in the learner’s journey.

Educators therefore have the responsibility to:

Promote responsible and ethical use by educating students and faculty about the capabilities and limitations of these tools, and by highlighting the self-sabotage involved in faking mastery, or faking its assessment, through improper use of AI.

Design new courses that make intelligent use of AI while building in disincentives and negative consequences for improper use of AI in learning activities and homework.

Develop new teaching methods and assessment strategies that depend less on the delivery of content and more on exploring bodies of knowledge and carrying out activities that promote effective learning, drawing on neuroscience and research such as that presented by Andrew Huberman in his podcast or by experts in learning and human cognition such as Stanislas Dehaene.

Foster a culture of academic integrity by emphasizing ethical practices and genuine collaboration between educators and learners in a shared process of education and learning, instead of relying on outdated approaches built on dominance, punishment and reward, and harsh, counterproductive feedback. Education is about the growth of all human capabilities and forms of intelligence, not about the survival of those most capable of overcoming hurdles and mistreatment.

Provide faculty with continuous AI training and resources, giving fellow educators and teaching staff what they need to adapt to rapidly changing tools and technologies, including those built into popular learning management systems (LMS) and other solutions used in education.

AI Literacy for Educators and Students

To responsibly integrate AI in education, AI literacy must become a priority. AI literacy refers to understanding AI’s capabilities, ethical implications, and limitations. Both educators and students need a foundational understanding of how AI works to use it effectively and ethically.

For Students: AI literacy includes understanding when and how to use AI in research and learning. Students should learn to evaluate AI outputs critically, understanding the risks of over-reliance and potential biases.

For Educators: Educators need training to guide AI’s use in the classroom and assess student work fairly. Workshops and resources on AI applications and ethical considerations can help educators model responsible AI use.

Making AI literacy an educational priority ensures that AI tools are used as intended—enhancing learning while fostering integrity and critical thinking.

What we need to do

Educators stand at a critical juncture in the age of AI, tasked with preparing students for a world where artificial intelligence will be integral to personal, academic, and professional growth. For teachers, professors, and teaching assistants, embracing AI responsibly means guiding students beyond passive tool use toward informed, ethical engagement.


Just as regulation alone cannot drive deep technological leadership or innovation within the European Union, any more than traffic laws or conventions were the foundation of automotive excellence, restrictive approaches to AI in education will not foster academic excellence or protect integrity. To deny AI’s role in modern education is to leave learners behind, widening an existing gap between those who have had the opportunity, curiosity, and means to develop AI literacy and those who have not. Such an approach risks repeating the mistakes of past digital and global transformations, where only a fraction benefited, leaving others disenfranchised and prone to extreme responses to economic and social exclusion.


Instead, educators must seize this opportunity to teach learners what AI is, how it works, when and how to use it, and why it can either help or harm the learning process. We need balanced guidelines on ethical AI use that empower educators to harness AI responsibly, preserving academic integrity and inclusivity. This approach is far more valuable than denying AI’s presence in education, which ultimately does little to prepare students for a future where AI is woven into nearly every aspect of life.

By embracing AI as a critical educational tool, we equip students to become active, responsible, and ethical users of this new technology—ensuring they are not only prepared for the future but are empowered to shape it.